Tutorial: First Steps

In this tutorial you will train an existing model (a denoising autoencoder) on the standard MNIST dataset.

To get up to speed on deep learning, check out a blog post here: Deep Learning 101.

I also recommend setting up Theano to use the GPU to vastly reduce training time.

OpenDeep is built around three classes:

- Dataset: you will use a dataset object to act as an interface to whatever data you train with. You can use standard datasets provided, or create your own from files or even in-memory if you pass arrays from other packages like Numpy.

- Model: the model defines the computation you want to perform.

- Optimizer: an optimizer takes a model and a dataset, and trains the model's parameters using examples drawn from the dataset. This is a logically separate object to give flexibility to training models as well as the ability to continually train models based on new data.
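
To make the division of labor concrete, here is a minimal sketch in plain Python. It does not use OpenDeep at all: `ToyDataset`, `ToyLinearModel`, and `ToySGD` are hypothetical names invented for this illustration, and the model is a one-parameter line rather than a neural network. The point is only to show why keeping the optimizer separate lets you keep training the same model when new data arrives.

```python
import random


class ToyDataset:
    """Hypothetical stand-in for a dataset: serves (x, y) training pairs."""

    def __init__(self, xs, ys):
        self.xs = list(xs)
        self.ys = list(ys)

    def examples(self):
        return list(zip(self.xs, self.ys))


class ToyLinearModel:
    """Hypothetical stand-in for a model: computes y = w * x."""

    def __init__(self):
        self.w = 0.0

    def predict(self, x):
        return self.w * x

    def gradient(self, x, y):
        # derivative of the squared error (w*x - y)**2 with respect to w
        return 2.0 * (self.predict(x) - y) * x


class ToySGD:
    """Hypothetical stand-in for an optimizer: owns the training loop."""

    def __init__(self, model, dataset, learning_rate=0.05, n_epochs=200, seed=0):
        self.model = model
        self.dataset = dataset
        self.learning_rate = learning_rate
        self.n_epochs = n_epochs
        self.rng = random.Random(seed)

    def train(self):
        for _ in range(self.n_epochs):
            examples = self.dataset.examples()
            self.rng.shuffle(examples)
            for x, y in examples:
                self.model.w -= self.learning_rate * self.model.gradient(x, y)


# the data follows y = 3 * x, so training should move w toward 3
toy_data = ToyDataset(xs=[0.0, 0.5, 1.0, 1.5, 2.0], ys=[0.0, 1.5, 3.0, 4.5, 6.0])
toy_model = ToyLinearModel()
ToySGD(toy_model, toy_data).train()

# later, new data arrives: reuse the same model with a fresh optimizer
new_data = ToyDataset(xs=[2.5, 3.0], ys=[7.5, 9.0])
ToySGD(toy_model, new_data).train()

print(round(toy_model.w, 3))
```

Because the model only knows how to compute and the optimizer only knows how to update, swapping in a different dataset or a different training rule touches one object and leaves the other two alone.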

Example code

Let's say you want to train a Denoising Autoencoder on the standard MNIST handwritten digit dataset. You can get started in just a few lines of code:

# standard libraries

import logging

# third-party imports

from opendeep.log.logger import config_root_logger

import opendeep.data.dataset as datasets

from opendeep.data.standard_datasets.image.mnist import MNIST

from opendeep.models.single_layer.autoencoder import DenoisingAutoencoder

from opendeep.optimization.adadelta import AdaDelta

# grab the logger to record our progress

log = logging.getLogger(__name__)

# set up the logging to display to stdout and to log files.

config_root_logger()

log.info("Creating a new Denoising Autoencoder")

# grab the MNIST dataset

mnist = MNIST()

# define some model configuration parameters

config = {

"input_size": 28*28, # dimensions of the MNIST images

"hidden_size": 1500 # number of hidden units - generally bigger than input size

}

# create the denoising autoencoder

dae = DenoisingAutoencoder(config)

# create the optimizer to train the denoising autoencoder

# AdaDelta is normally a good generic optimizer

optimizer = AdaDelta(dae, mnist)

# train the model!

optimizer.train()

# test the trained model and save some reconstruction images

n_examples = 100

# grab 100 test examples

test_xs = mnist.getDataByIndices(indices=range(n_examples), subset=datasets.TEST)

# test and save the images

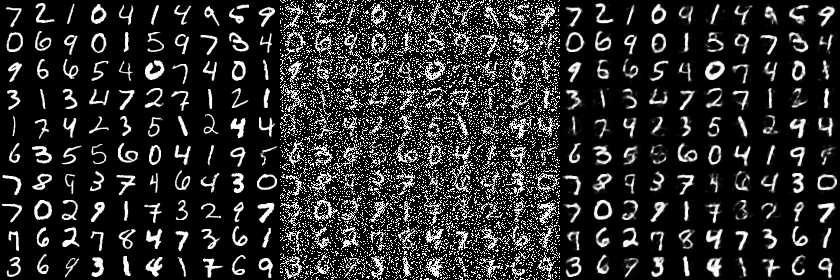

dae.create_reconstruction_image(test_xs)After 318 training epochs with AdaDelta, this is what the reconstructions look like on test images:

Left: original test images.

Center: corrupted (noisy) images.

Right: reconstructed images (output).
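
If you are wondering where the three columns come from, the following NumPy sketch walks through one forward pass of a generic denoising autoencoder. It is not OpenDeep's implementation, and several choices are assumptions made for illustration: masking noise with a corruption level of 0.3, sigmoid activations, tied encoder/decoder weights, and a mean squared error loss. The weights are random and untrained, so the output is not a meaningful reconstruction; the sketch only shows how the original, corrupted, and reconstructed arrays relate.

```python
import numpy

rng = numpy.random.RandomState(0)

input_size, hidden_size = 28 * 28, 1500  # same sizes as the config above
n_examples = 100

# stand-in for a batch of flattened images with pixel values in [0, 1]
originals = rng.uniform(0, 1, size=(n_examples, input_size))

# assumed corruption: masking noise that zeroes each pixel with probability 0.3
corruption_level = 0.3
keep_mask = rng.binomial(n=1, p=1 - corruption_level, size=originals.shape)
corrupted = originals * keep_mask


def sigmoid(z):
    return 1.0 / (1.0 + numpy.exp(-z))


# untrained parameters; the decoder reuses W transposed (assumed tied weights)
W = rng.normal(0, 0.01, size=(input_size, hidden_size))
b_hidden = numpy.zeros(hidden_size)
b_visible = numpy.zeros(input_size)

# encode the corrupted input, then decode back to image space
hidden = sigmoid(corrupted.dot(W) + b_hidden)
reconstructed = sigmoid(hidden.dot(W.T) + b_visible)

# the loss compares the reconstruction with the clean originals,
# not with the corrupted input the model actually saw
loss = numpy.mean((reconstructed - originals) ** 2)
print(originals.shape, corrupted.shape, reconstructed.shape)
```

The key design point is the last step: because the target is the clean image, the model cannot simply copy its input and has to learn structure that lets it fill in the corrupted pixels.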

Passing data from Numpy/Scipy/Pandas/Array

If you want to use your own data for training/validation/testing, you can pass any array-like object (it gets cast to a numpy array in the code) to a Dataset like so:

# imports

from opendeep.data.dataset import MemoryDataset

import numpy

# create some fake random data to demonstrate creating a MemoryDataset

# train set

fake_train_X = numpy.random.uniform(0, 1, size=(100, 5))

fake_train_Y = numpy.random.binomial(n=1, p=0.5, size=100)

# valid set

fake_valid_X = numpy.random.uniform(0, 1, size=(30, 5))

fake_valid_Y = numpy.random.binomial(n=1, p=0.5, size=30)

# test set (showing you can mix and match the types of inputs, as long as they can be cast to numpy arrays)

fake_test_X = [[0.1, 0.2, 0.3, 0.4, 0.5],

[0.9, 0.8, 0.7, 0.6, 0.5]]

fake_test_Y = [0, 1]

# create the dataset!

# note that everything except train_X is optional; train_X alone is the bare minimum for an unsupervised model.

data = MemoryDataset(train_X=fake_train_X, train_Y=fake_train_Y,

valid_X=fake_valid_X, valid_Y=fake_valid_Y,

test_X=fake_test_X, test_Y=fake_test_Y)

# now you can use the dataset normally when creating an optimizer like other tutorials show!

Summary

Congrats, you just:

- set up a dataset (MNIST or an array from memory)

- instantiated a denoising autoencoder model with some configurations

- trained it with an AdaDelta optimizer

- and predicted some outputs given inputs (and saved them as an image)!
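
If you are curious what an AdaDelta optimizer does on each step, here is a minimal sketch of the update rule as described in Zeiler's AdaDelta paper, applied to a one-dimensional toy problem. It is not OpenDeep's `AdaDelta` class: the function name `adadelta_minimize` is hypothetical, and the `rho` and `epsilon` values are assumed defaults chosen for illustration.

```python
import math


def adadelta_minimize(grad_fn, x0, n_steps=1000, rho=0.95, epsilon=1e-6):
    """Run n_steps of the AdaDelta update on a single scalar parameter."""
    x = x0
    avg_sq_grad = 0.0   # running average of squared gradients
    avg_sq_delta = 0.0  # running average of squared updates
    for _ in range(n_steps):
        g = grad_fn(x)
        avg_sq_grad = rho * avg_sq_grad + (1 - rho) * g * g
        # step size is the ratio of the two running RMS values; no learning rate
        delta = -math.sqrt(avg_sq_delta + epsilon) / math.sqrt(avg_sq_grad + epsilon) * g
        avg_sq_delta = rho * avg_sq_delta + (1 - rho) * delta * delta
        x += delta
    return x


def loss(x):
    return (x - 3.0) ** 2


def grad(x):
    return 2.0 * (x - 3.0)


x_start = 0.0
x_end = adadelta_minimize(grad, x_start)
print(loss(x_start), loss(x_end))
```

Note that there is no hand-tuned learning rate anywhere in the loop: the step size adapts from the history of gradients and updates.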
