Tutorial: Classifying Handwritten MNIST Images

MNIST: The "hello world" of deep learning

MNIST is a standard academic dataset of grayscale images of handwritten digits. In this tutorial, we will look at two ways to quickly set up a model that classifies these images into their 0-9 digit labels:

- Use the Prototype container to quickly build a feedforward multilayer perceptron from basic layers.

- Turn this Prototype into a Model of our own.

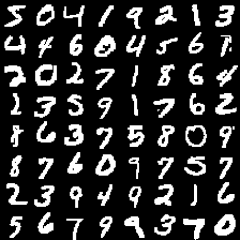

Some MNIST images.
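Each MNIST image is a 28x28 grid of 8-bit grayscale pixel intensities (0-255), and a multilayer perceptron usually consumes it as one flat vector of 784 values. The sketch below shows that preprocessing in plain Python under a few assumptions: the helper names (`flatten`, `scale`, `one_hot`) are hypothetical, the "image" is synthetic rather than real MNIST data, and scaling to [0, 1] plus one-hot labels is a common convention, not necessarily what OpenDeep does internally.

```python
# Hypothetical helpers illustrating how one MNIST-style example is commonly
# prepared for an MLP. The image below is synthetic, not real MNIST data.

IMAGE_ROWS, IMAGE_COLS = 28, 28
NUM_CLASSES = 10


def flatten(image):
    """Flatten a 2-D list of pixel rows into one list, row by row."""
    return [pixel for row in image for pixel in row]


def scale(pixels, max_value=255):
    """Scale 8-bit grayscale intensities (0-255) into the range [0, 1]."""
    return [p / max_value for p in pixels]


def one_hot(label, num_classes=NUM_CLASSES):
    """Encode an integer digit label (0-9) as a one-hot vector."""
    if not 0 <= label < num_classes:
        raise ValueError(f"label must be in [0, {num_classes}), got {label}")
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


# Synthetic 28x28 image: a vertical stroke in the middle columns (a crude "1").
image = [[255 if col in (13, 14) else 0 for col in range(IMAGE_COLS)]
         for _ in range(IMAGE_ROWS)]

x = scale(flatten(image))
y = one_hot(1)
print(len(x), max(x), min(x))  # 784 1.0 0.0
print(y)
```

The 784-element length of `x` is the input size the first layer of an MNIST perceptron has to accept.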

Prototype: Quickly create models by adding layers (similar to Torch)

The opendeep.models.container.Prototype class is a container for quickly assembling multiple layers into a single model. It is essentially a flexible list of Model objects: you can add one layer (model) at a time, or add lists of models linked in more complex ways.
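To make the "flexible list of models" idea concrete, here is a hypothetical pure-Python sketch of a sequential layer container. It is not the opendeep.models.container.Prototype API: the class name `LayerList`, its `add` method, and the toy layers are all illustrative assumptions, and it covers only the simple case where each layer feeds the next, not the more complex linkings the text mentions.

```python
# Hypothetical sketch of the idea behind a Prototype-style container.
# Names here are illustrative only and are NOT OpenDeep's API.
from typing import Callable, List, Sequence, Union

Layer = Callable[[List[float]], List[float]]


class LayerList:
    """An ordered list of layers applied one after another."""

    def __init__(self) -> None:
        self.layers: List[Layer] = []

    def add(self, layer: Union[Layer, Sequence[Layer]]) -> "LayerList":
        """Append a single layer, or every layer from a list, in order."""
        if callable(layer):
            self.layers.append(layer)
        else:
            self.layers.extend(layer)
        return self  # allow chained .add(...) calls

    def __call__(self, x: List[float]) -> List[float]:
        # Feed the output of each layer into the next one.
        for layer in self.layers:
            x = layer(x)
        return x


def double(x: List[float]) -> List[float]:
    return [2 * v for v in x]


def shift(x: List[float]) -> List[float]:
    return [v + 1 for v in x]


def relu(x: List[float]) -> List[float]:
    return [max(0.0, v) for v in x]


model = LayerList().add(double).add([shift, relu])
print(model([-3.0, 0.5]))  # [0.0, 2.0]
```

Accepting either one layer or a list in `add` mirrors the description above; a real container would also need to track layer sizes and trainable parameters, which this sketch leaves out.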

To classify MNIST images with a multilayer perceptron, you only need the inputs, a hidden layer, and the output classification layer. Let's dive in and create a Prototype with these layers!
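Before building this with OpenDeep, it may help to see what those three pieces compute. The following is a minimal pure-Python forward pass, not OpenDeep code: a 784-value input, one ReLU hidden layer, and a 10-way softmax output. The hidden size of 32, the uniform initialization, and the random stand-in input are arbitrary assumptions, and the weights are untrained, so the predicted digit is meaningless.

```python
# Minimal sketch of an MLP forward pass for MNIST-shaped input:
# 784 inputs -> ReLU hidden layer -> 10-way softmax output.
# Hidden size, initialization, and input are illustrative assumptions;
# the weights are untrained, so the predicted digit is arbitrary.
import math
import random


def dense(x, weights, biases):
    """Affine layer: out[j] = sum_i x[i] * weights[j][i] + biases[j]."""
    return [sum(xi * wji for xi, wji in zip(x, w_row)) + b
            for w_row, b in zip(weights, biases)]


def relu(v):
    return [max(0.0, a) for a in v]


def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]


def init_layer(n_in, n_out, rng):
    """Small uniform random weights and zero biases (a simple, arbitrary choice)."""
    bound = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-bound, bound) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


N_INPUT, N_HIDDEN, N_CLASSES = 28 * 28, 32, 10
rng = random.Random(0)
w1, b1 = init_layer(N_INPUT, N_HIDDEN, rng)
w2, b2 = init_layer(N_HIDDEN, N_CLASSES, rng)


def predict_proba(x):
    hidden = relu(dense(x, w1, b1))        # hidden layer
    return softmax(dense(hidden, w2, b2))  # output classification layer


x = [rng.random() for _ in range(N_INPUT)]  # stand-in for a flattened image
probs = predict_proba(x)
digit = max(range(N_CLASSES), key=lambda k: probs[k])
print(len(probs), round(sum(probs), 6), digit)
```

Training would adjust `w1`, `b1`, `w2`, and `b2` so that the highest softmax probability lands on the correct digit; that is the part a library like OpenDeep handles for you.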
